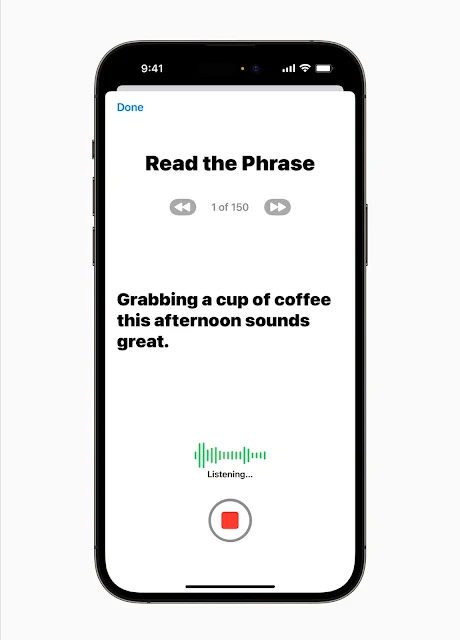

Apple has announced that by the end of the year, iPhone and iPad users will be able to hear their devices speak in their own voice. The feature, 'Personal Voice', is part of a bundle of new software features for cognitive, speech, vision, and mobility accessibility that the tech giant previewed on May 16th. It will display random text prompts and capture the user's voice across 15 minutes of recorded audio.

Furthermore, Apple's 'Live Speech' tool will allow users to save commonly used phrases, which can then be spoken by the device on their behalf during phone calls, FaceTime conversations, and in-person interactions.

In a press release, Apple previewed software features for cognitive, vision, hearing, and mobility accessibility, along with innovative tools for individuals who are nonspeaking or at risk of losing their ability to speak.

For people with cognitive disabilities, Apple's "Assistive Access" uses innovations in design to distill apps and experiences to their essential features in order to lighten cognitive load.

For users at risk of losing their ability to speak — such as those with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability — Personal Voice is a simple and secure way to create a voice that sounds like them.

Users can create a Personal Voice by reading along with a randomised set of text prompts to record 15 minutes of audio on iPhone or iPad. This speech accessibility feature uses on-device machine learning to keep users’ information private and secure, and it integrates with Live Speech so users can speak with their Personal Voice when connecting with loved ones.

Personal Voice can be created using iPhone, iPad, and Mac with Apple silicon, and will be available in English.

Apple also highlighted several other features coming to the Mac, including a way for deaf or hard-of-hearing users to pair Made for iPhone hearing devices with a Mac. The company is also adding an easier way to adjust the size of the text in Finder, Messages, Mail, Calendar, and Notes on Mac.

For users who are blind or have low vision, Apple is introducing "Detection Mode in Magnifier", which offers Point and Speak, a feature that identifies text users point toward and reads it aloud to help them interact with physical objects such as household appliances.

Point and Speak in Magnifier makes it easier for users with vision disabilities to interact with physical objects that have several text labels. For example, while using a household appliance — such as a microwave — Point and Speak combines input from the camera, the LiDAR Scanner, and on-device machine learning to announce the text on each button as users move their finger across the keypad. Point and Speak is built into the Magnifier app on iPhone and iPad.

Point and Speak will be available on iPhone and iPad devices with the LiDAR Scanner in English, French, Italian, German, Spanish, Portuguese, Chinese, Cantonese, Korean, Japanese, and Ukrainian.

IndianWeb2.com is an independent digital media platform for business, entrepreneurship, science, technology, startups, gadgets and climate change news & reviews.
